Futures take the form of a promise. Technoscientific futures do so even more explicitly: they project a future built around a specific technological object. The “Making Media Futures” panel offered examples of this practice in four talks focused on four different promises: (imagining) the perfect girlfriend, the merging of minds and machines, airports before the existence of airports, and technoscientific imaginations of the Turkish nation-state. How are these technoscientific promises articulated? What forms do they take? Our EASST panel, titled “The power of technological promises: quantum technologies as an emerging field”, explored the power of these visions through quantum computing and information (QC). We argued that QC offers a useful example precisely because the field has yet to deliver on any of its promises. It therefore offers social scientists a window into how actors construct institutions, narratives, and ideologies in real time, and into how these narratives shift according to the needs of an audience, a field, or other factors. The emerging quantum sciences are also an arena in which international techno-economic relations are being contested and reshaped. No quantum computers fulfilling the promises made by the field exist, and none are likely to exist in the next decade or so. The same is true of concepts like the quantum internet and fully secure quantum communication.

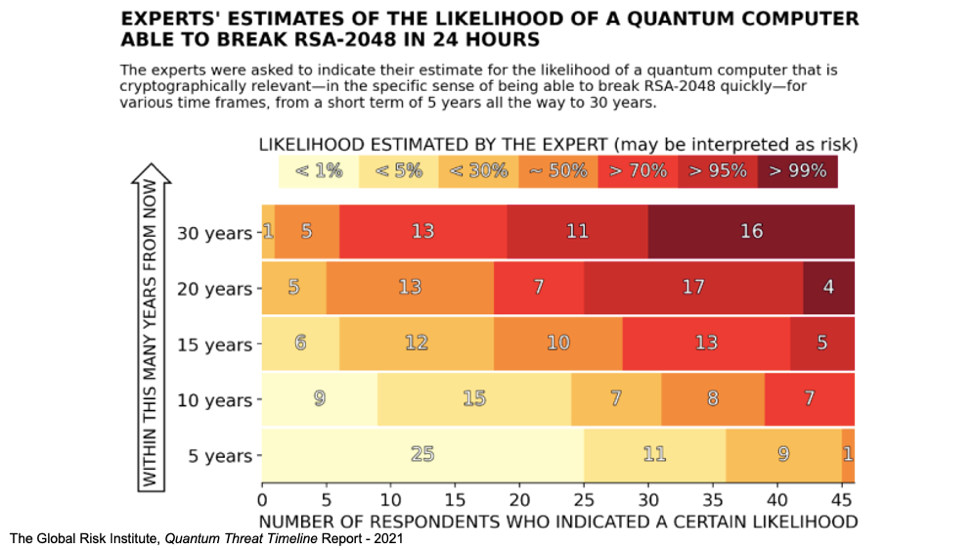

Zeki Seskir’s paper on post-quantum cryptography offered an account of this landscape. It examines the claim that quantum computers will be able to break the Rivest–Shamir–Adleman (RSA) cryptosystem, one of the public-key schemes widely used to secure internet communications, and touches on the extensive work of the US National Institute of Standards and Technology (NIST) in planning for this future. The promise of encryption-breaking has spawned not only the new field of post-quantum cryptography, which Seskir discussed, but also a class of consultants who prepare companies to adapt to quantum computing. These consultants are not Seskir’s focus per se, but their emergence demonstrates how seriously industry takes the promises of QC and how complex the institutions built around it have become.

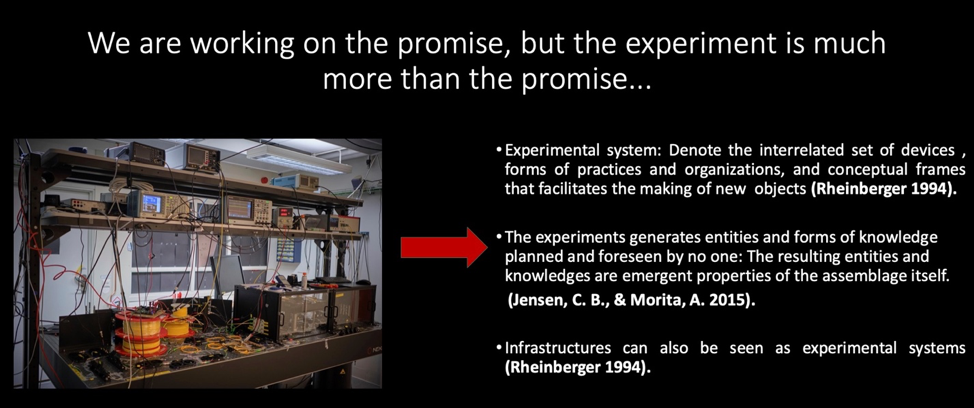

Another paper presented at our panel, coauthored by STS scholar Camilo Castillo and electrical engineer Alvaro Alarcón Cuevas, examined the relationship between the promises and the products of quantum communication, a field in which Alarcón himself is an active researcher. The authors asked how scholars and broader society do and should evaluate the field’s successes, or lack thereof. Their paper offers a tactic for handling the overwhelming power of technological promises: shift focus away from them. They argued that “the experiment is much more than the promise” and that “we miss things when we are only fixated on the promise” (Alarcón and Castillo 2022). They cite Jensen and Morita’s 2015 paper, “Infrastructures as Ontological Experiments,” which argues that “experiments generate entities and forms of knowledge planned and foreseen by no one: The resulting entities and knowledges are emergent properties of the assemblage itself” (Jensen and Morita 2015). Alarcón and Castillo frame tacit knowledge as an example of the products of experimental research that are not outlined in the field’s explicit promises. This is also the stance of some of the QC scientists I have interviewed, who stress that no one knows exactly what technologies may emerge from QC research. Perhaps this approach offers an alternative to the historical reasoning on display more publicly in the field. Many actors in the quantum computing world try to have it both ways: for some audiences, they make Castillo and Alarcón’s argument that the field is valuable beyond its explicit promises, while permitting or encouraging other audiences to equate quantum computing’s future with classical computing’s past.

How do you fund a promise? How do you build and maintain institutions around a technology that does not yet exist? How do you craft a credible technoscientific future? These were the central questions of the papers on our panel. My paper addressed the role of history-writing in technoscientific infrastructure. As a historian doing fieldwork with QC practitioners, I was struck by how much time the scientists spent telling histories to each other and to general audiences.1 I was further struck by how much importance they placed on the disciplinary practice of history. They wanted me to write their histories the right way, by which they meant in a way that corresponds to their experience. The scientists were well read in, and opinionated about, the history of science literature on their disciplines. They felt compelled to write their own histories partly because their experiences had led them to believe that historians of science had talked only to self-promoters and had thus inscribed the history of the field, and therefore the field itself, incorrectly.

When examining US and EU government documents on QC, I found they were filled with histories: histories of science, of the US state, of technology, and of thought. Often, these were histories relayed by scientists and engineers. Our panel offered an example of this as well in Alarcón’s participation, though unlike the scientists and engineers in my paper, he worked closely with Castillo, a social scientist. In his essay “Society in the Making,” Michel Callon famously argues that the engineers he studies are, in fact, sociologists: they act as sociologists in designing, and getting buy-in for, the technological assemblages they build (Callon 1987). Callon furthermore argues that disciplinary sociologists ought to learn from engineer-sociologists (Callon 1987). Around the same time, in The Pasteurization of France, Bruno Latour gave an account of how scientists make and remake the grounds of politics (Latour 1988). What should we, as social scientists, make of this usurpation? Perhaps disciplinary categories are aspirational: they create distinct cultures within their boundaries without actually cordoning off practice.

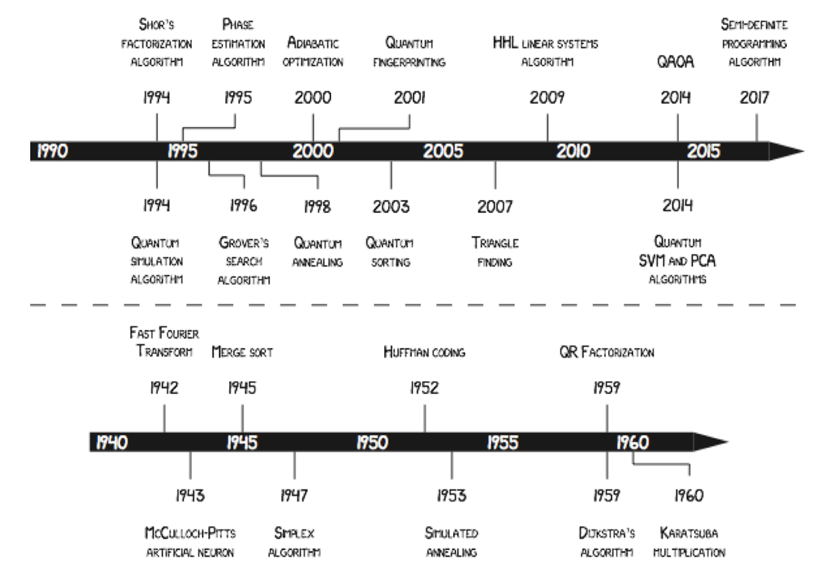

Callon and Latour, taken together, offer an explanation of how scientists make credible technoscientific promises: they take on the role of social scientists in order to make and remake the grounds of politics. My paper looked at how an international network of industrial and academic scientists made the promise of quantum computing credible, turning it into an overdetermined future, and how they convinced US government and industry actors to fund and build institutions for the field. I found that the structure of the promises made by QC relies on scientists’ ability to integrate the field into other histories of science and technology, for example, into the history of computing. Being integrated into the history of computing allowed QC practitioners to use preexisting infrastructures built by and for classical computing practitioners. Scientists in this context act as historians whose histories have great impact: they guide policy and practice and create and recreate a number of scientific fields. The histories they recount enlist other figures (e.g., government bureaucrats, scientists from other disciplines, or industry figures) into their network and make claims about the stakes of their project. Through this practice, they make and remake the grounds of politics.

Not incidentally, these scientists (especially early practitioners in the field) are acutely interested in pedagogy and in remaking the general public’s understanding of the world. By making insistent claims about the nature of reality, for example the assertion that ‘the physical world is quantum mechanical’, they attempt to re-engineer nature and the human subject, explicitly writing and rewriting the past, the future, reality, and the subjects who experience it as such (Feynman 1982, 467–71).

Whether or not historians of science want it (Daston claims that, unlike STS practitioners, they would rather not), histories of science act on the world and guide policy (Daston 2009). One of my central arguments is that these scientist-histories are important to institution-building and planning, as well as to anticipating the future. By anticipating the future, I mean preparing the ground for it, following Michelle Murphy’s definition of anticipation (Adams, Murphy, and Clarke 2009). In this case, historical reasoning is the epistemology of futures: quantum scientists apply these histories to excavate institutional and imagined space for their project, and they present these histories as the means through which society can anticipate the future.

The kind of historical reasoning scientists display relies on a crucial slippage between description and prediction. Take, for example, a central narrative of ‘tech’: Moore’s Law, which began as Gordon Moore’s 1965 observation about the growing number of components on an integrated circuit and is popularly understood as the belief that devices will keep getting steadily smaller, cheaper, and faster (Fuchs 2015). Many see this as a quasi-law of technological development. Not every actor takes Moore’s Law to be predictive, but the narrative that filters into mainstream culture treats it as almost a law of nature, and regardless of their beliefs about its status, many actors use it to make predictions for a variety of reasons. Moreover, those who tell progressivist histories like Moore’s Law often do not feel obliged to explain the trend they describe, which leads later readers or consumers to treat such trends as laws of history. Historians of science may think we are writing descriptions of the world, but others will always use those descriptions as predictions.

Actors are primed for these progressivist histories because, as Daston shows in her article “The History of Science and the History of Knowledge,” historians of science and others have tied histories of science to progressivist histories of civilization in order to explain the difference between ‘western civilization’ and the rest of humanity, and thereby to justify western domination (Daston 2017). During the Cold War, progressivist histories were adapted to account for lingering inequalities by asserting a relationship between ‘technology’ and economic difference: technological development became what distinguished the good economic outcomes of wealthy countries from the poor outcomes of the rest (Latham 2000). These techno-economic narratives account for our societal obsession with histories of technology and provide the substrate from which histories of science and technology told by scientists draw their power. They likewise encourage the slippage between description and prediction. These histories of science and technology have thus become a form of reasoning in their own right: a predictive epistemology whose product is credible futures.

During the Q&A, we fielded many questions about when QC would replace classical computing and what stage of classical computing’s historical development QC had reached. For example, audience members asked whether QC was still at the pre-silicon-transistor phase or whether we were closer to seeing quantum computers that would replace classical computers (quantum computers will likely never replace classical computers).

These questions recall an oft-repeated narrative in which QC is the second coming of computing: a recapitulation of the information technology revolution that brought consumers personal computers, the internet, and more. In the course of my research, I have found this narrative so powerful that managers in the US government and computing industry2 believe the field will precisely reenact major computing milestones on a similar, if not identical, timeline to classical computing. Likewise, as Seskir’s presentation demonstrated,3 actors believe it will even have the same constituent parts (transistors, repeaters, the internet, etc.). Actors anticipate quantum as computing 2.0 or information technologies 2.0, to the extent that US intelligence agency reports worry that the technology is so overdetermined it may hinder progress. Once again, description has become prediction, and history has become the future. There is a good reason for this: histories justify funding and industrial planning (by entities like the US national security state) and eventually lay the groundwork for industry involvement. QC practitioners in particular, by presenting their project as an extension of the larger project of computing, are able to overcome barriers to its realization through this mechanism.

One question raised by all of this discussion is: what is the proper relationship between STS, the history of science, and the sciences? What promises do we make to each other and to the public? Castillo and Alarcón’s presentation offers a potential model. Their close collaboration predated this paper, and because of it they were able to synthesize a valuable and interesting answer to the problem of how to deal with technological promises in practice: spend more effort elaborating the societal benefits of these fields beyond the technoscientific, product-focused promises they make to potential funders. Perhaps scientists, historians, and STS practitioners should reimagine the boundaries of our fields and collaborate on writing the kinds of histories that matter: histories that acknowledge they act on the world as well as describe it.

1 They even introduced me to one practitioner as the ‘family historian’ of the field.

2 This probably extends beyond the US, but most of my research has been with US sources.

3 This way of thinking about quantum technologies is pretty ubiquitous. Seskir’s presentation offered some interesting examples of this tendency.

Bibliography

Adams, Vincanne, Michelle Murphy, and Adele E. Clarke. “Anticipation: Technoscience, Life, Affect, Temporality.” Subjectivity 28, no. 1 (September 2009): 246–47.

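Callon, Michel. “Society in the Making: The Study of Technology as a Tool for Sociological Analysis.” In The Social Construction of Technological Systems: New Directions in the Sociology and History of Technology, edited by Wiebe E. Bijker, Thomas P. Hughes, and Trevor J. Pinch. Cambridge, MA: The MIT Press, 1987.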

Daston, Lorraine. “Science Studies and the History of Science.” Critical Inquiry 35, no. 4 (2009): 798–813.

Daston, Lorraine. “The History of Science and the History of Knowledge.” KNOW: A Journal on the Formation of Knowledge 1, no. 1 (March 2017): 131–54.

Feynman, Richard P. “Simulating Physics with Computers.” International Journal of Theoretical Physics 21, no. 6–7 (June 1982): 467–88.

Fuchs, Erica. “DARPA Does Moore’s Law: The Case of DARPA and Optoelectronic Interconnects.” In State of Innovation. Routledge, 2015.

Jensen, Casper Bruun, and Atsuro Morita. “Infrastructures as Ontological Experiments.” Engaging Science, Technology, and Society 1 (2015): 81–87.

Latham, Michael E. Modernization as Ideology: American Social Science and “Nation Building” in the Kennedy Era. New Cold War History. Chapel Hill: University of North Carolina Press, 2000.

Latour, Bruno. The Pasteurization of France. Cambridge, MA: Harvard University Press, 1988.